It's like JSON, but fast and small.

case class Value(i: Int, l: Long, flags: Set[Byte]) derives Pack, Unpack

case class NestedMap(myMap: Map[Int, Seq[Value]]) derives Pack, Unpack

val nestedMap = NestedMap(Map(

1 -> Seq(

Value(1, 2, Set(0, 1, 2)),

Value(3, 4, Set.empty)

),

2 -> Seq.empty,

))81 // 1 element map

a5 6d 79 4d 61 70 // key: str 'myMap'

82 // 2 element map

01 // First key: 1

|- 92 // 2 element array

| |- 83 // 3 element map: Value(...)

| |--- a1 69 01 // key: 'i', value: 1 (int compressed to 2 bytes based on actual value )

| |--- a1 6c 02 // key: 'l', value: 2 (long compressed to 2 bytes based on actual value)

| |--- a5 66 6c 61 67 73 // str: 'f', 'l', 'a', 'g', 's'

| | 93 // 3 element array Set(0, 1, 2)

| | 00 01 02

| |- 83 // 3 element map: Value(3, 4, Set.empty)

| |--- a1 69 03 i = 3

| |--- a1 6c 04 l = 4

| |--- a5 66 6c 61 67 73 'flags' = Set.empty

| |- 90 // empty array

02

|- 90 empty array

What is MessagePack?

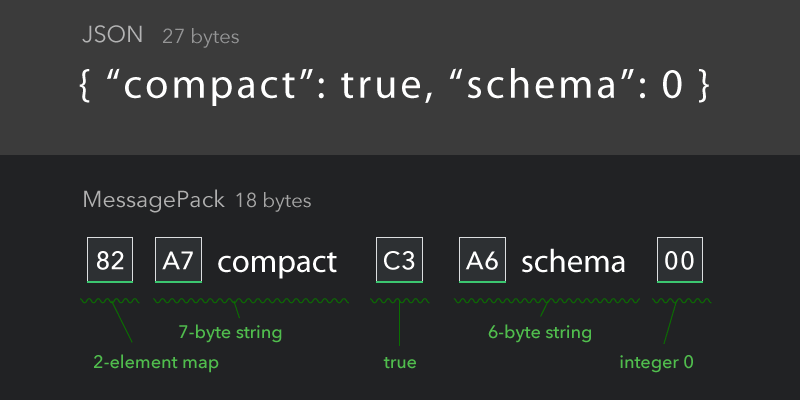

MessagePack is an efficient binary serialization format. It lets you exchange data across systems and languages in a self-descriptive fashion, much like how JSON is used, but in a way that is not terrible for the planet like JSON. A small integer can be encoded in a single byte, and short strings only need a single byte prefix + the original byte array. A MessagePack implementation is available in many languages (See also the list in http://msgpack.org) and thus works as a universal data format.

The goal of spack is to provide a convenient, performant, and typesafe way to serialize and deserialize Scala data.

In addition, MessagePack has isomorphisms with JSON, which means that all JSON can be converted to and from MessagePack payloads (It is not the case, however, that all MessagePack payloads can be losslessly converted to JSON, as MessagePack supports things like heterogeneous Arrays, and non-string Map keys, which JSON does not support).

Much like writing to JSON, a payload serialized using spack will always be a valid MessagePack payload, so even if your data model has evolved, it can be deserialized, inspected, patched or migrated, and used. For working directly with MessagePack payloads, spack provides the Message ADT. If you are familiar with JSON libraries, this is the equivalent of working with the JSON object data type itself, (e.g., play.api.libs.json.JsValue, `zio.json.ast.JSON), where one can programatically traverse the tree, iterate over arrays, extract values, etc.

Only Scala 3 is currently supported because scala3 magnolia is used which requires Mirrors

libraryDependencies ++= "com.kolemannix" %% "spack" % "<version>"case class Foo(count: Int, bars: Seq[String]) derives Pack, Unpack

val foo = Foo(count = 42, Seq("I", "have", "42", "bars"))

val output: Array[Byte] = foo.packToByteArray

val parsed: Either[String, Foo] = Spack.unpack[Foo](output)case class Foo(i: Int, l: Long) derives Pack, Unpack

case class NestedMap(myMap: Map[String, Seq[Foo]]) derives Pack, Unpack

val nestedMap = NestedMap(Map(

"k1" -> Seq(Foo(1, 2), Foo(3, 4)),

"k2" -> Seq.empty,

))

nestedMap.The dependency of my dependency is my

dependencyproblem -Dwight Schrute, probably

You should care about this. Currently the only dependency is msgpack-java, which has no dependencies of its own. TODO paste the classpath here to try and start a trend?

This was primarily an educational project, so I originally implemented the entire protocol from scratch. After a series of benchmarking

and optimizing, I was unable to reach performance parity with the msgpack-java, which has its own fully custom Buffer implementation and

uses sun.misc.Unsafe to avoid bounds checking, along with a host of other optimizations. I've decided to simply provide a clean Scala interface

for working with MessagePack that makes use of the underlying implementation from msgpack-java. My personal implementation can be found in the spack.naive

package.

MessagePack Java is an extremely solid implementation, with a lot of polish and performance optimizations. After considerable time benchmarking and optimizing, I could not get my implementation to match that of msgpack-java for writes. This is because msgpack-java makes use of sun.misc.Unsafe, and is doing raw writes to memory regions without bounds checks, as well as re-using and concatenating small byte buffers, rather than copying on buffer resize. I have replaced the internal protocol implementation with msgpack-java, no need to reinvent a very fast and well-tested wheel, and I am focusing on the Scala features

2/19/23

[info] Benchmark Mode Cnt Score Error Units

[info] FormatBench.naivePackMessage thrpt 3 9582.516 ± 427.727 ops/s

[info] FormatBench.naivePackMessage:·async thrpt NaN ---

[info] FormatBench.packMessage thrpt 3 16048.841 ± 1171.456 ops/s

[info] FormatBench.packMessage:·async thrpt NaN ---

[info] FormatBench.unpackMessage thrpt 3 12712.242 ± 748.861 ops/s

[info] FormatBench.unpackMessage:·async thrpt NaN ---

- Fast protocol impl

- Clear API with tiny surface area

- Clean ADT for

Message - Scala typeclass derivation for ADTs and Case Classes

- Custom type <->

Message<-> Binary

- Custom type <->

- Custom type <->

Binarydirect? Drawing inspiration fromjsoniter-scala, perhaps an implementation straight from custom type to binary to save the intermediary representation overhead - Clean runtime classpath (only msgpack-java, which is dependency free)

- Command-line utility for converting to and from JSON??

- Address deficiencies of the MessagePack protocol

- Extend the MessagePack protocol

- Integrate with other Scala or Java libraries

| format name | Read | Write |

|---|---|---|

| fixmap | [x] | [x] |

| fixarray | [x] | [x] |

| fixstr | [x] | [x] |

| nil | [x] | [x] |

| (never used) | [x] | [x] |

| false | [x] | [x] |

| true | [x] | [x] |

| bin 8 | [x] | [x] |

| bin 16 | [x] | [x] |

| bin 32 | [x] | [x] |

| ext 8 | [x] | [x] |

| ext 16 | [x] | [x] |

| ext 32 | [x] | [x] |

| float 32 | [x] | [x] |

| float 64 | [x] | [x] |

| uint 8 | [x] | [x] |

| uint 16 | [x] | [x] |

| uint 32 | [x] | [x] |

| uint 64 | [x] | [x] |

| int 8 | [x] | [x] |

| int 16 | [x] | [x] |

| int 32 | [x] | [x] |

| int 64 | [x] | [x] |

| fixext 1 | [x] | [x] |

| fixext 2 | [x] | [x] |

| fixext 4 | [x] | [x] |

| fixext 8 | [x] | [x] |

| fixext 16 | [x] | [x] |

| str 8 | [x] | [x] |

| str 16 | [x] | [x] |

| str 32 | [x] | [x] |

| array 16 | [x] | [x] |

| array 32 | [x] | [x] |

| map 16 | [x] | [x] |

| map 32 | [x] | [x] |

| positive fixint | [x] | [x] |

| negative fixint | [x] | [x] |

Repo completeness

- Finish usage readme

- Examples project that depends on core

- Publish snapshots

Correctness assurance

- Test BigInt

- More roundtrip tests for all edge cases using java impl as a guide

- Implement extension types

Profiling results

- Write to one big buffer instead of allocating and concatenating buffers

- Assess ByteArrayOutputStream (+ DataOutputStream wrapper) vs ByteBuffers with resize checks

- Can we reuse a buffer pool for small buffers

- Lots of time utf8 encoding. Can we cache string writes above 128 chars and ArrayCopy them since they'll likely be repetitive Tried this and it did not really help much; not worth the complexity IMO

ADT derivations

- Derive ADTs to/from Message

- The json-iter approach: macro-based direct encoding from ADT to and from message

- Benchmark a handwritten codec for a caseclass vs the current derived, to see if worth writing json-iter style macros

- Pack/unpack sealed traits

- Pack/unpack Options

- Pack/unpack any iterable

Derived vs handwritten write codec. I want to gauge whether its worth the effort to write macros that codgen handwritten codecs

[info] Benchmark Mode Cnt Score Error Units [info] CaseClassBench.derived thrpt 5 782300.824 ± 23288.932 ops/s [info] CaseClassBench.derived:·async thrpt NaN --- [info] CaseClassBench.handwritten thrpt 5 1196017.545 ± 24258.168 ops/s [info] CaseClassBench.handwritten:·async thrpt NaN ---