Teckel is a framework designed to simplify the creation of Apache Spark ETL (Extract, Transform, Load) processes using YAML configuration files. This tool aims to standardize and streamline ETL workflow creation by enabling the definition of data transformations in a declarative, user-friendly format without writing extensive code.

This concept is further developed on my blog: Big Data with Zero Code

- Declarative ETL Configuration: Define your ETL processes with simple YAML files.

- Support for Multiple Data Sources: Easily integrate inputs in CSV, JSON, and Parquet formats.

- Flexible Transformations: Perform joins, aggregations, and selections with clear syntax.

- Spark Compatibility: Leverage the power of Apache Spark for large-scale data processing.

Here's an example of a fully defined ETL configuration using a YAML file:

- Select. Example: here
- Where. Example: here
- Group By. Example: here
- Order By. Example: here
- Join. Example: here

- Apache Spark: Ensure you have Apache Spark installed and properly configured.

- YAML files: Create configuration files specifying your data sources and transformations.

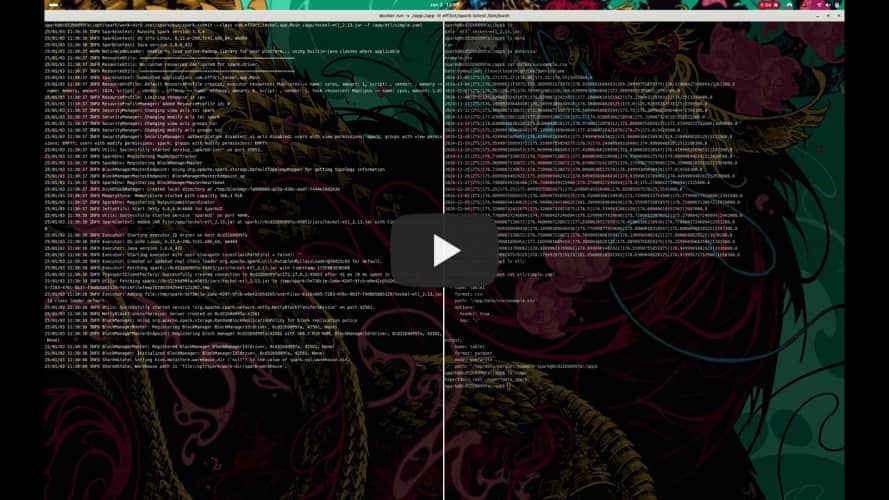

If you don't have Apache Spark installed, you can deploy an Apache Spark cluster using the Docker image eff3ct/spark:latest, available in the eff3ct0/spark-docker GitHub repository.

Clone the Teckel repository and integrate it with your existing Spark setup:

    git clone https://github.com/rafafrdz/teckel.git
    cd teckel

Build the Teckel ETL CLI into an Uber JAR using the following command:

    sbt cli/assembly

The resulting JAR, teckel-etl_2.13.jar, will be located in the cli/target/scala-2.13/ directory.
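Before moving on, it can help to confirm that the build produced the JAR. The following is a minimal sketch, assuming it is run from the root of the cloned repository; the JAR variable name is only illustrative.

```shell
#!/bin/sh
# Expected location of the Uber JAR, relative to the repository root
# (path and file name taken from the build step described above).
JAR="cli/target/scala-2.13/teckel-etl_2.13.jar"

if [ -f "$JAR" ]; then
  echo "Found $JAR"
else
  # Not fatal here: just explain what is missing and how to produce it.
  echo "Missing $JAR: run 'sbt cli/assembly' from the repository root first" >&2
fi
```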

Once teckel-etl_2.13.jar is ready, use it to execute ETL processes on Apache Spark with the following arguments:

- -f or --file: The path to the ETL file.
- -c or --console: Run the ETL in the console.

To run the ETL in the console, you can use the following command:

    cat << EOF | /opt/spark/bin/spark-submit --class com.eff3ct.teckel.app.Main teckel-etl_2.13.jar -c
    input:
      - name: table1
        format: csv
        path: '/path/to/data/file.csv'
        options:
          header: true
          sep: '|'
    output:
      - name: table1
        format: parquet
        mode: overwrite
        path: '/path/to/output/'
    EOF

To run the ETL from a file, you can use the following command:

    /opt/spark/bin/spark-submit --class com.eff3ct.teckel.app.Main teckel-etl_2.13.jar -f /path/to/etl/file.yaml

Important

Teckel CLI as dependency / Teckel ETL as framework.

The Teckel CLI is a standalone application that can also be used as a dependency in your project. Notice that the Uber JAR is named teckel-etl, not teckel-cli or teckel-cli-assembly. This is because we want to distinguish between the Teckel CLI dependency and the ETL framework.

Check the Integration with Apache Spark documentation for more information.
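The file-based run described above can be wrapped in a small script. This is a sketch rather than an official workflow: the ETL_FILE, SPARK_SUBMIT and JAR variables and the /tmp/teckel-example.yaml location are illustrative assumptions, and the YAML is the same input/output definition used in the console example.

```shell
#!/bin/sh
# Illustrative locations (assumptions); override them from the environment.
ETL_FILE="${ETL_FILE:-/tmp/teckel-example.yaml}"
SPARK_SUBMIT="${SPARK_SUBMIT:-/opt/spark/bin/spark-submit}"
JAR="${JAR:-teckel-etl_2.13.jar}"

# Save the ETL definition to a file. Quoting 'EOF' stops the shell
# from expanding anything inside the YAML.
cat > "$ETL_FILE" << 'EOF'
input:
  - name: table1
    format: csv
    path: '/path/to/data/file.csv'
    options:
      header: true
      sep: '|'
output:
  - name: table1
    format: parquet
    mode: overwrite
    path: '/path/to/output/'
EOF

# Submit only when both spark-submit and the JAR are actually present.
if [ -x "$SPARK_SUBMIT" ] && [ -f "$JAR" ]; then
  "$SPARK_SUBMIT" --class com.eff3ct.teckel.app.Main "$JAR" -f "$ETL_FILE"
else
  echo "Skipping submit: need $SPARK_SUBMIT and $JAR" >&2
fi
```

Keeping the definition in a file rather than piping it through the console makes it easier to version the pipeline alongside the rest of a project.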

Teckel can be integrated with Apache Spark in two ways: by adding either the Teckel CLI or the Teckel API as a dependency in your project, or by using it as a framework in your Apache Spark project.

Check the Integration with Apache Spark documentation for more information.

Contributions to Teckel are welcome. If you'd like to contribute, please fork the repository and create a pull request with your changes.

Teckel is available under the MIT License. See the LICENSE file for more details.

If you have any questions regarding the license, feel free to contact Rafael Fernandez.

For any issues or questions, feel free to open an issue on the GitHub repository.